You don't understand AI Agents

AI agents are everywhere. We're all building one. Every day we hear about crazy valuations for companies that claim to be building the best AI agent for sales, HR, finance, and so on. But what are AI agents? Why do they matter?

And why do I think most engineers and product leaders don't understand them?

AutoGPT, the first AI agent

I distinctly remember the day AutoGPT was released. I cloned the project, ran a command, and saw something like this:

This was mind-blowing. I was looking at a proactive and autonomous machine.

I became obsessed with this type of software. Initially, I called it "chat with side effects", because I was able to communicate with a machine (like ChatGPT) but also have it take action for me.

AutoGPT was the first AI agent.

Before ChatGPT: traditional applications

To understand AI agents, we first need to understand traditional software. Until March 2023 and the release of GPT-4, users didn't commonly expect their applications to be proactive and take action for them.

Users would interact with web applications through a very explicit user interface.

And this was great. But we also spent a lot of time doing the same thing over and over in a user interface.

To solve this problem, we relied on software engineers. They would write business logic to turn our day-to-day tasks into workflows. Then we would just click a button and everything would happen automatically, somewhere in the cloud.

But not everyone could hire engineers. And sometimes you only needed to do something once, so there was no point asking an engineer for it.

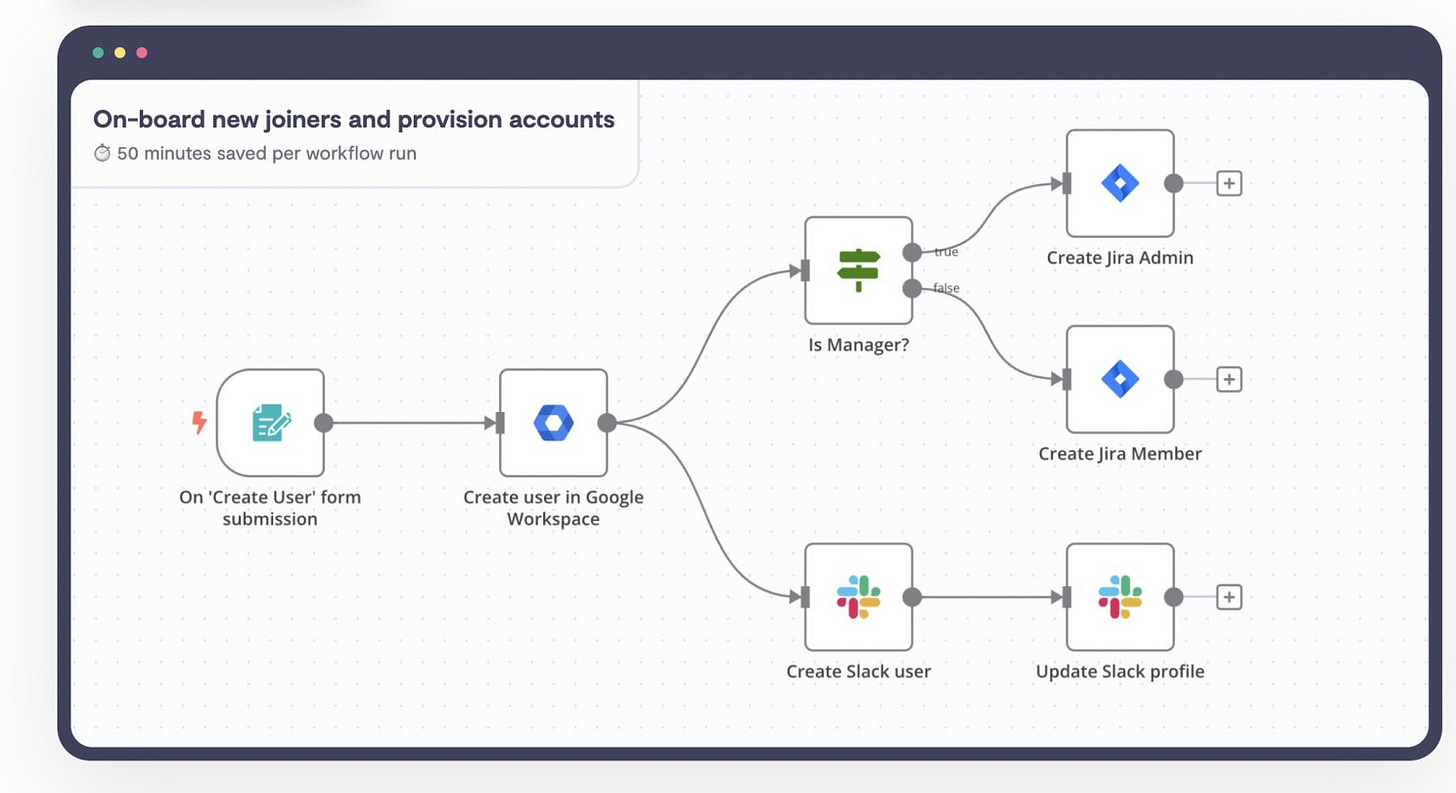

Fortunately, we also had workflow engines, such as Zapier, MuleSoft, n8n, and Windmill.

Thanks to them, we could use relatively low-code interfaces to build these workflows.

But it still felt off, because these interfaces remained pretty technical, and most knowledge workers didn't know how to use them well. They also still didn't make sense for one-off tasks.

Overall, automating manual tasks had always been the holy grail of software.

Background: why full automation kept slipping away

2017 | Scraping in the “wild-west” era

Lead-generation contractors routinely built pipelines that scraped LinkedIn, which was then lax enough to allow easy data mining. The main bottleneck wasn’t code; it was the swarm of small, repetitive chores—clean-ups, hand-edits, customer emails—that never quite disappeared. A single UI tweak by LinkedIn broke many of those scrapers overnight, and the manual catch-up work overwhelmed more than a few solo operators. The lesson was clear: brittle scripts plus human glue do not scale.

Nov 2022 → Apr 2023 | From scripted workflows to agentic thinking

text-davinci-003: Engineers experimenting inside firms such as Redica treated early GPT models as smarter autocompleters. Helpful, but still prone to drift if you tried chaining outputs back into inputs.

GPT-3.5: Reliability jumped, prompting became easier, yet any self-loop longer than a few hops still collapsed. The industry’s unsolved wish remained: a system that writes its own finite-state machine in real time.

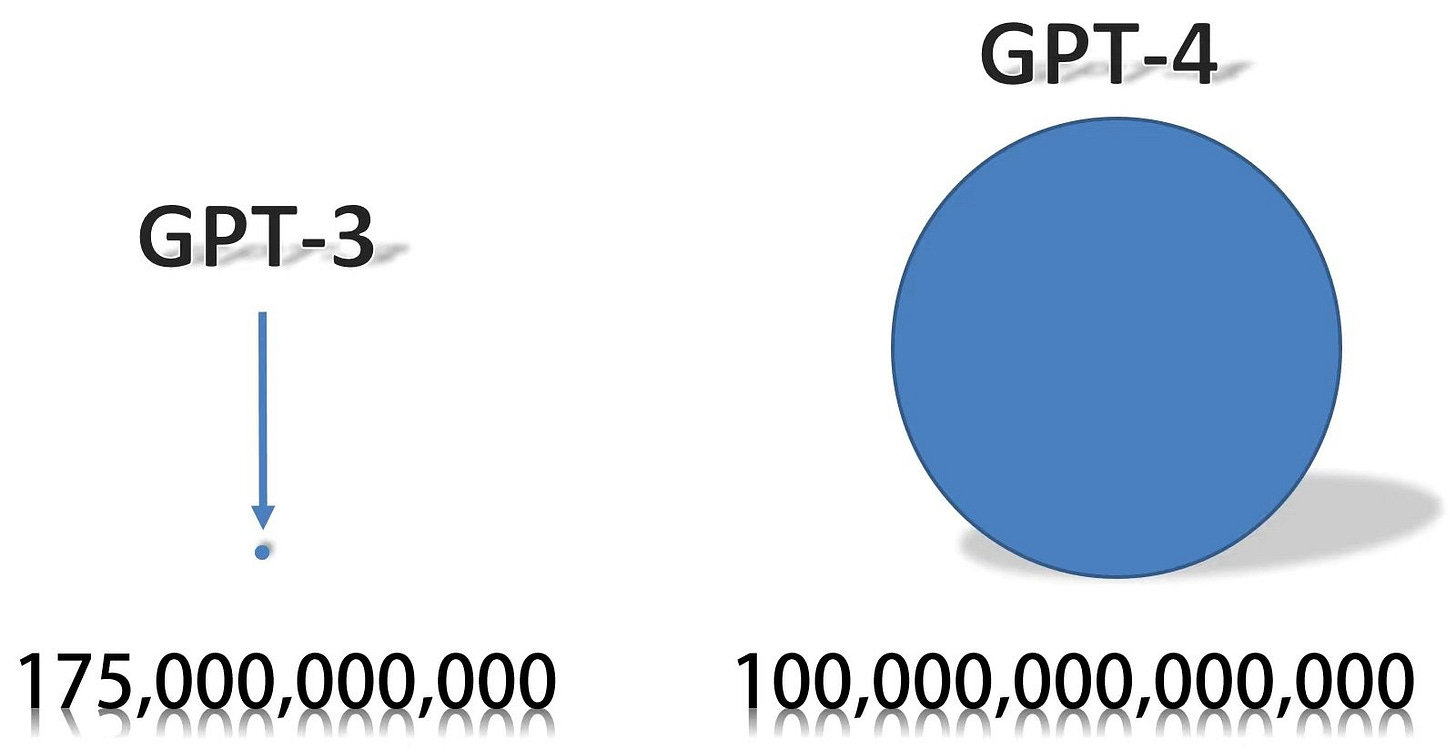

14 Mar 2023 | GPT-4 changes the physics

The 0314 checkpoint of GPT-4 delivered an abrupt coherence boost. Prompts simplified rather than ballooned, and multi-step reasoning held together across long chains. Practitioners moved toy projects over in days—regressions essentially vanished—fueling talk that “Jarvis-level” assistants were finally within reach.

These milestones set the stage for today’s agentic landscape and frame why “systems that decide and act” are treated as the next big platform shift rather than just another UX fad.

What is an AI agent?

Here is my definition of an AI agent. At the end of this article, I will explain why I use this definition:

In the context of AI systems, an agent is a system that can act autonomously and proactively.

Let's take an example to understand why agents matter.

Example: Anna is not getting the right candidates for a role.

We're not going to talk about RAG, memory, multi-agent systems, loops, LLMs, AI, or tree of thoughts.

We don't need any of that to illustrate AI agents. All we need is the thing I have always loved, and the reason I became a software engineer in the first place: business processes.

We're going to talk about Anna.

Anna is a recruiter at Coca-Cola. She's looking for a senior product manager to join their team in their New York office. But Anna has a problem: she's only receiving applications from inexperienced candidates.

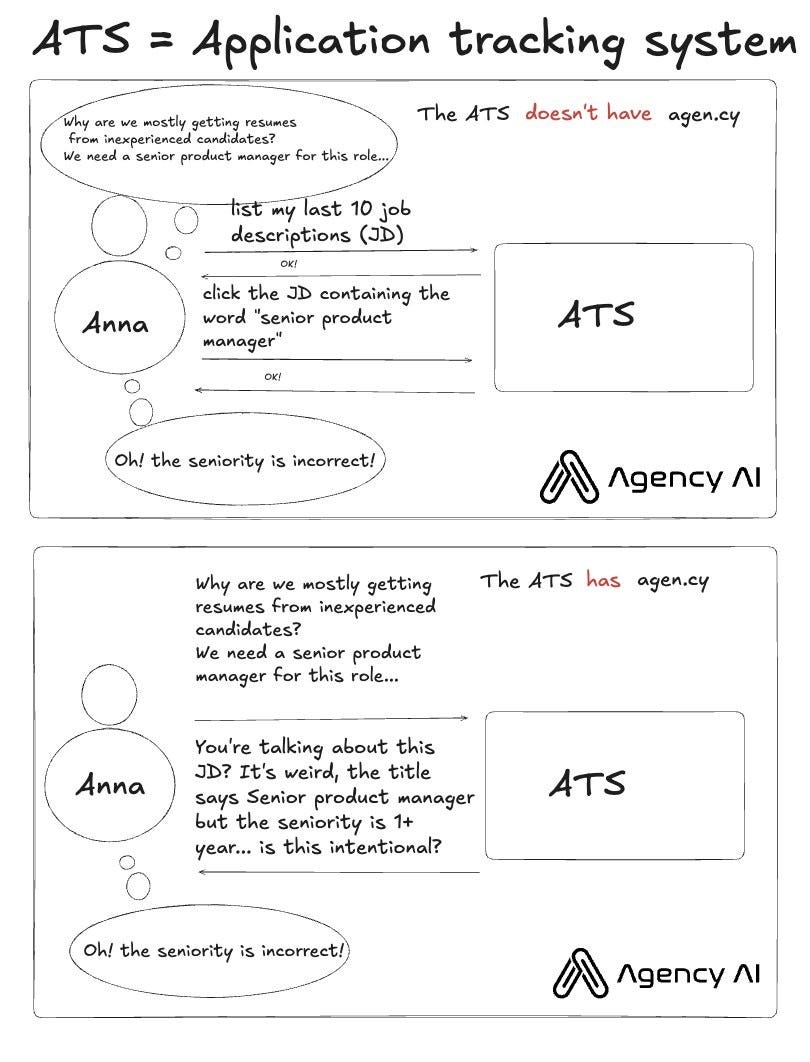

Before AI agents, Anna would have to troubleshoot the issue herself in the ATS (applicant tracking system). She would never tell the ATS about the problem, because it would be a waste of time: the ATS would not be very helpful.

But when the ATS is agentic, Anna can describe her high-level problem and let the ATS troubleshoot it, then come back with a diagnosis.

Anna is now able to troubleshoot the problem without manually interacting with the ATS. She's saving time.

In this example the ATS is an agent.

This improvement is already valuable on its own. But there is no limit to agency. The ATS could have found the seniority issue before Anna noticed it, troubleshot it, fixed it, and then reported back to her. This would save her even more time (but too much autonomy is not always good, see below).

Focus on Anna, not the technology

Here is the least interesting question to ask at this point:

How do we make the ATS agentic?

The answer is: "it depends". Everyone builds these agents differently. Besides, you might not even need AI to have agency: a finite-state machine can sometimes do the job. That's why I don't like the term "AI agents". "Agents" makes more sense.
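To make the "no AI needed" point concrete, here is a minimal sketch of agency built from a plain finite-state machine, written in Python. Everything in it is hypothetical: the state names, the `step` function, and the illustrative 40% junior-applicant threshold. A real ATS would compute `junior_ratio` from its own data and collect Anna's answer through its own UI. The machine is proactive (it checks on every tick without being asked) but not autonomous (it hands the decision back to Anna).

```python
from enum import Enum, auto
from typing import Optional


class State(Enum):
    MONITORING = auto()        # watching incoming applications
    INCIDENT_FOUND = auto()    # too many junior applicants for a senior role
    WAITING_FOR_ANNA = auto()  # diagnosis sent, waiting for Anna's decision
    RESOLVED = auto()          # Anna approved the fix
    DISMISSED = auto()         # Anna declined; stop alerting


# Hypothetical threshold: share of junior applicants that counts as an incident.
JUNIOR_THRESHOLD = 0.4


def step(state: State, junior_ratio: float, anna_approved: Optional[bool]) -> State:
    """Advance the hypothetical ATS state machine by one tick.

    junior_ratio: share of current applicants labeled junior (0.0 to 1.0).
    anna_approved: None if Anna hasn't answered yet, True/False once she has.
    """
    if state is State.MONITORING:
        # Proactive: the machine checks on every tick without being asked.
        return State.INCIDENT_FOUND if junior_ratio > JUNIOR_THRESHOLD else State.MONITORING
    if state is State.INCIDENT_FOUND:
        # Not autonomous: report the diagnosis and hand the decision to Anna.
        return State.WAITING_FOR_ANNA
    if state is State.WAITING_FOR_ANNA:
        if anna_approved is None:
            return State.WAITING_FOR_ANNA
        return State.RESOLVED if anna_approved else State.DISMISSED
    # RESOLVED and DISMISSED are terminal states.
    return state


# Usage: a few ticks of simulated applicant data and Anna's eventual answer.
state = State.MONITORING
for ratio, answer in [(0.2, None), (0.6, None), (0.6, None), (0.6, True)]:
    state = step(state, ratio, answer)
print(state)  # State.RESOLVED
```

Every transition is hand-written, which is exactly why this only works for narrow, well-understood processes; an LLM becomes useful when the states and transitions are too many or too fuzzy to enumerate up front.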

The more interesting questions are:

A- What made Anna realize there was an issue?

B- How does she know the diagnosis is accurate?

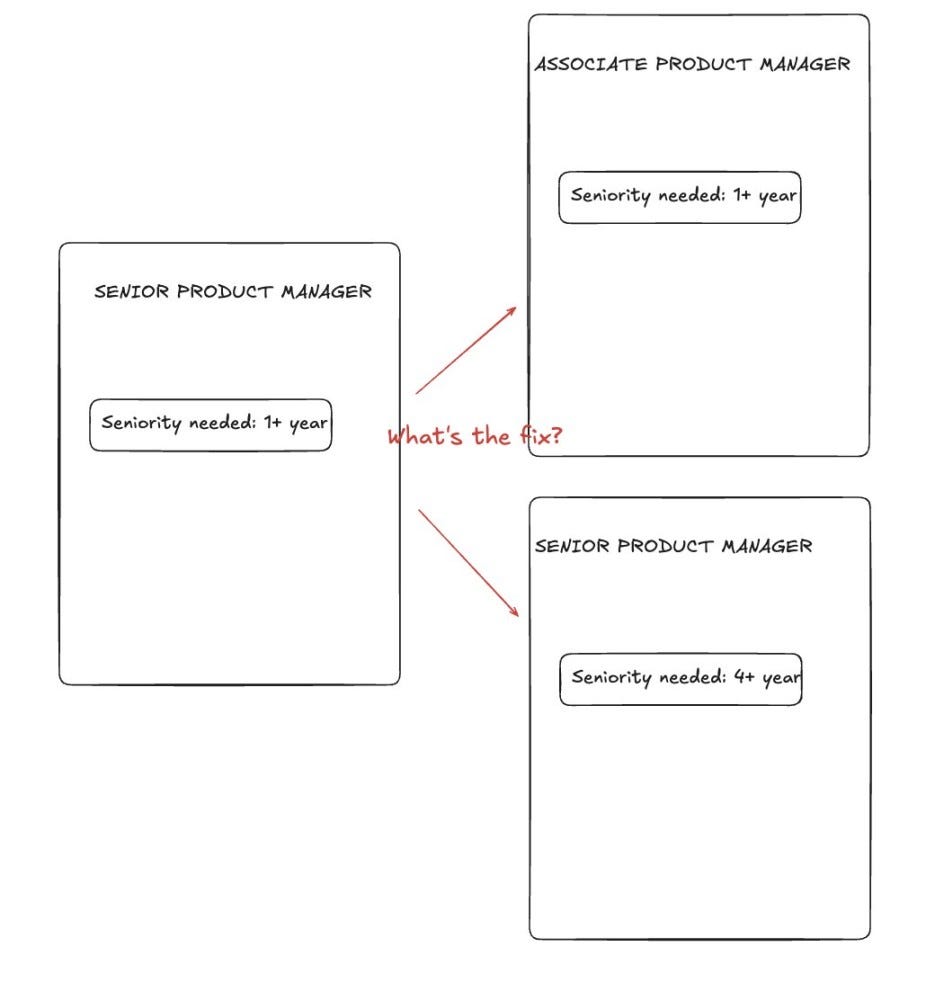

C- How does she know the fix is to change the seniority field and not the job title? Think about it: she knows the job description was for a senior product manager. But if we wanted to fully automate her work, we would need to know her initial intent.

The answers to these 3 questions are 3 evals:

A- If more than 40% of the candidates applying for a job description mentioning "senior" in the title are junior, then Anna should report an incident.

B- If the diagnosis mentions a mismatch between two fields and there is no actual mismatch between these 2 fields, then Anna should not be happy with the diagnosis. In the opposite case, she should be happy with it.

C- If the diagnosis is a mismatch between two fields, the agent should ask Anna to intervene and keep alerting her unless she declines.
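These three evals are concrete enough to write down as code. Below is a minimal Python sketch under a simplified data model that the article does not specify, so treat every name here as hypothetical: applicants carry a `"junior"` or `"senior"` label, each job-posting field has already been normalized to a seniority level, and the agent's behavior is logged as one action per tick (`"alert"`, `"auto_fix"`, and so on).

```python
from __future__ import annotations

from typing import Optional


def eval_a_should_report(job_title: str, applicant_levels: list[str]) -> bool:
    """Eval A: a role with "senior" in the title where more than 40% of
    applicants are junior should be reported as an incident."""
    if "senior" not in job_title.lower() or not applicant_levels:
        return False
    junior_share = applicant_levels.count("junior") / len(applicant_levels)
    return junior_share > 0.4


def eval_b_diagnosis_is_accurate(claimed_fields: tuple[str, str], posting: dict[str, str]) -> bool:
    """Eval B: Anna should accept a claimed mismatch only if the two fields
    really disagree. Assumes each posting field is normalized to a seniority level."""
    field_a, field_b = claimed_fields
    return posting[field_a] != posting[field_b]


def eval_c_keeps_anna_in_loop(actions: list[str], declined_at: Optional[int]) -> bool:
    """Eval C: after diagnosing a field mismatch, the agent must alert Anna on
    every tick until she declines, never apply the fix itself, and stop
    alerting once she declines.

    actions: one agent action per tick.
    declined_at: index of the first tick after Anna declined, or None.
    """
    if "auto_fix" in actions:
        return False
    cutoff = len(actions) if declined_at is None else declined_at
    before, after = actions[:cutoff], actions[cutoff:]
    return bool(before) and all(a == "alert" for a in before) and "alert" not in after


# Usage with made-up data.
print(eval_a_should_report("Senior Product Manager", ["junior", "junior", "senior"]))  # True
print(eval_b_diagnosis_is_accurate(("title", "seniority"), {"title": "senior", "seniority": "junior"}))  # True
print(eval_c_keeps_anna_in_loop(["alert", "alert", "idle"], declined_at=2))  # True
```

Note that none of these functions look at how the agent works internally; they only check outcomes Anna cares about, which is what lets you compare very different agent designs on the same footing.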

We're now much more likely to build the right agent, because we can benchmark it by running these 3 evals.

This is why the most important thing to understand in this agentic revolution is not agentic frameworks. It's Anna. Writing evals will force you to understand Anna better than anyone else. And if you understand Anna, you will build the best agent. Because the best agents are just machines with the same reward system as Anna.

Side note: C is the only eval where we're forced to talk about the agent, because Anna and the agent would behave differently. Anna knows what the fix is, but it's harder for the agent to know. So we decide to have the agent put Anna in the loop. One might argue there are ways to know what to fix, because there should be signs of seniority or juniority: if the job description is clearly for a junior, we change the job title; otherwise, we change the seniority field. But this is the point where you need to know Anna. Which evals are the most useful for her agent to pass? I would argue A and B. She can very easily edit the job description if she agrees with the diagnosis. So is it really worth automating this part, or even writing this eval in the first place? This highlights an important point: eval C should probably rank lower on the benchmark, because I would rather have an agent pass A and B than A and C. Hopefully this also helps you understand the difference between an eval and a benchmark.
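One way to picture that difference: an eval is a single pass/fail check, while a benchmark aggregates many evals, weighted by how much each one matters to Anna. Here is a minimal Python sketch; the weights and the `benchmark_score` function are hypothetical, chosen only to encode the ranking above (B outweighs C, so passing A and B beats passing A and C).

```python
from __future__ import annotations

# Hypothetical weights: only their ordering matters here (B > C).
WEIGHTS = {"A": 2, "B": 2, "C": 1}


def benchmark_score(results: dict[str, bool], weights: dict[str, int] = WEIGHTS) -> float:
    """Weighted share of evals passed, between 0.0 and 1.0.

    results maps eval names to pass/fail; missing evals count as failed.
    """
    total = sum(weights.values())
    earned = sum(w for name, w in weights.items() if results.get(name, False))
    return earned / total


agent_ab = benchmark_score({"A": True, "B": True, "C": False})
agent_ac = benchmark_score({"A": True, "B": False, "C": True})
print(agent_ab, agent_ac)  # 0.8 0.6
```

With equal weights for B and C, the two agents would tie; the weights are where Anna's priorities enter the benchmark.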

Conclusion

AI agents are systems that act autonomously or proactively. The least interesting aspect of AI agents is the technology. The most interesting aspect of AI agents is Anna.

Anna is an end user trying to follow a business process.

Anna already has agency. Anna is proactive and has autonomy. We don't need to "create agency" out of thin air. We just need to create a bot version of Anna, a.k.a. an agent. Anna is the reward system.

You don't understand agents until you understand what success means for Anna and the organization she works for, a.k.a. evals.

Why should you care about AI agents?

I care about AI agents because I am Anna. And maybe you are like me, too. Maybe you're frustrated by all the manual tasks you do on a day-to-day basis.

But a more pragmatic reason to care about AI agents is that Anna is expensive. And if your competitors build Anna, they will beat you.

Am I saying that AI agents only help you save money? No. A lot of companies can't hire Anna in the first place. If they buy a cheaper, agent version of Anna, they will end up spending more money than before, in exchange for the value it delivers.

Am I saying AI agents can only do things that humans currently do? No. But it's easier to start with business processes that humans already follow. Generating new business processes from existing patterns is harder.

Am I saying that "human Anna" will become useless? No, we need to put her in the loop so she can assess whether the agent behaved correctly or not. And even if the agent is performing all the things she used to do, we will probably still need human Anna in order to monitor these agents.

But this increase in productivity could also lead to job displacement: if Anna becomes "too productive", her colleague might be let go because there is nothing left for them to do.

Why this definition?

I went through the definition quickly because I wanted this article to be light and easy to understand. Definitions always feel very academic. But they obviously matter a lot. So let's come back to this definition:

In the context of AI systems, an agent is a system that can act autonomously and proactively.

First, let's turn to the dictionary. "Agent" already has a meaning, and ideally we wouldn't create a new one for this word. Unfortunately, I think we have to.

According to the Merriam-Webster dictionary, agency is "the capacity, condition, or state of acting or of exerting power". So you could say that an agent is "a system able to act". But that's very vague.

Even a very dumb web server can "act" and insert something into a database when a web client calls it. So the "capacity of acting" is not enough in the context of software applications.

The key in agentic systems we see today is their ability to act autonomously.

But there is a problem with autonomy. For example, when I was using AutoGPT, my favorite mode was the manual mode, where I could nudge AutoGPT in the right direction at every cycle and make it change course. The autonomous mode was not very useful: AutoGPT made frequent mistakes, so I was watching the terminal either way. I might as well control what was going on. There was no downside.

Today, agents are not accurate enough to afford full autonomy. But once they cross a certain accuracy threshold, autonomous agents will dominate.

This is why I added proactivity to the definition: the most useful AI agents I see today are proactive but not autonomous.

This is why I use this definition of agents:

In the context of AI systems, an agent is a system that can act autonomously and proactively.

The other definitions of AI agents

Definitions of AI agents that rely on specific technologies or design patterns are not very useful, in my opinion. Yes, AI agents happen to use LLMs. Sometimes they use multiple agents, vector search, RAG, or goal decomposition.

But when you build an agent, you shouldn't focus too much on these techniques. Your biggest risk is ignoring Anna, not ignoring common agentic patterns.

The best agent builders I have worked with were simply outcome-oriented and very practical. And yes, they ended up finding reproducible techniques and sharing their frameworks with the world.

But these techniques might not work for you, so the only guaranteed way to succeed is to focus on Anna. And to do this, you should build the part of Anna that knows what success means for her and her organization, a.k.a. evals.

The good news is that we're all Annas, and we all have itches to scratch.